Global S&T Development Trend Analysis Platform of Resources and Environment

| The unforeseen acceptance of deepfakes | |

| admin | |

| 2022-01-17 | |

| Publication year | 2022 |

| Language | English |

| Country | France |

| Field | Earth Sciences; Resources and Environment |

| Body text (English) |

Rapid improvements in deepfake technology, which makes it possible to modify a person's appearance or voice in real time, call for an ethical review at this still early stage of its use. Researchers working in the field of cognitive science shed some light on the public’s perception of this phenomenon.

Metamoworks / Adobe Stock

Video conferencing, live streaming, and platforms such as Zoom and TikTok have grown in popularity. Algorithms embedded in these services make it possible for users to create deepfakes, including in real time, that change their appearance and voice. In the very near future, it could become difficult to tell whether the person we are chatting with online is really who we see on the screen or whether they are using a voice or face changer.

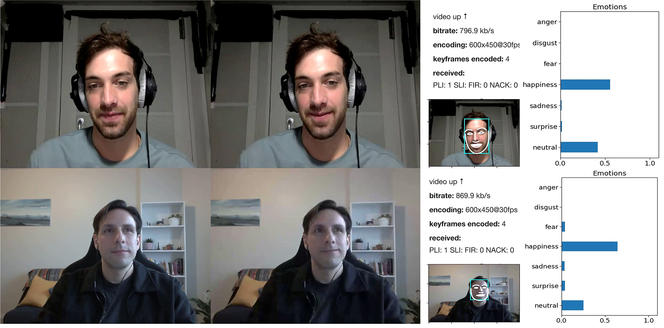

To find out how the public perceives such uses, we conducted a survey whose findings were recently published in Philosophical Transactions of the Royal Society B. The study presented different hypothetical applications of expressive voice transformation to a sample of some three hundred predominantly young French urban students, who are potential future users of these technologies.

“Ethically acceptable” uses

These scenarios, styled to resemble Black Mirror, a dystopian science fiction television series, included, among others, a politician trying to sound more convincing and a call centre employee able to modify the voices of unhappy customers in order to ease the upset caused by aggressive behaviour. Situations where the technology was used in a therapeutic context were also presented: a patient suffering from depression transforming their voice to sound happier when speaking to family, or a person experiencing stress listening to a calm version of their own voice. These situations were inspired by previous research in which we studied manners of speaking perceived as honest, as well as by research from the start-up Alta Voce. What started out as simple laboratory experiments has taken on a much more concrete dimension since the health crisis and the development of video conferencing tools.

Provided they were told of the modification beforehand, our participants showed no difference in acceptance whether the speech transformations applied to themselves or to other people they listened to. Yet regarding technologies such as self-driving vehicles, the subjects of our study would prefer not to buy a car that would spare a pedestrian rather than the driver in the event of an accident, despite agreeing that this would be the most ethically acceptable option. Our survey also shows that the more familiar people are with these new uses of emerging technologies, through books or science fiction programmes for instance, the more morally justifiable they find them. This creates the conditions for their widespread adoption, despite the "techlash" (a contraction of technology and backlash) observed in recent years, including growing animosity towards big tech.

A clear risk of manipulation

Use of these technologies can be perfectly harmless, if not virtuous. In a therapeutic context, we are developing voice changers aimed at PTSD sufferers, or at studying the reaction of patients in a coma to voices made to sound “happy”. These techniques, however, also present obvious risks, such as identity theft on dating apps or on social media, as in the case of the young motorcycle influencer known as @azusagakuyuki who turned out to be a 50-year-old man. Wider societal threats include increased discrimination with, for example, the possible use by companies of masking technology to neutralise their customer service employees’ foreign accents.

The points of view, opinions and analyses expressed in this article are the sole responsibility of the author and do not in any way constitute a statement of position by the CNRS. |

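| URL | View original |

| Source platform | CNRS News |

| Document type | News |

| Item identifier | http://119.78.100.173/C666/handle/2XK7JSWQ/344579 |

| Collection | Earth Sciences; Resources and Environmental Sciences |

| Recommended citation (GB/T 7714) | admin. The unforeseen acceptance of deepfakes. 2022. |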

Unless otherwise stated, all content in this system is protected by copyright, with all rights reserved.
